Introducing Stable Cascade: The future of image generation

Stable Cascade is a new diffusion model that generates images from text descriptions. It is developed by Stability AI, the company behind Stable Diffusion, and is presented as faster, more affordable, and potentially easier to use than previous models such as Stable Diffusion XL (SDXL).

Stable Cascade distinguishes itself from the Stable Diffusion series by incorporating a trio of interconnected models—Stages A, B, and C. This structure, built upon the Würstchen architecture, facilitates a layered approach to image compression, delivering superior outcomes using a highly compact latent space.

Here's a breakdown of how these components interact:

Stage C, the Latent Generator phase, converts user input into compact 24x24 latents, which are then passed to the Latent Decoder phase, made up of Stages A and B. Stages A and B play a role similar to the VAE in Stable Diffusion, which compresses images, but they achieve a much higher level of compression.
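To put the 24x24 latent size in perspective, the short calculation below compares it with a factor-8 VAE at the same 1024x1024 resolution. The factor of 8 for Stable Diffusion comes from Stability AI's announcement rather than from this article, so treat it as an assumption of the sketch.

```python
def spatial_compression(image_side: int, latent_side: int) -> float:
    """Ratio between the image side and the latent side, in pixels per latent cell."""
    return image_side / latent_side


IMAGE_SIDE = 1024          # resolution used throughout this article
CASCADE_LATENT_SIDE = 24   # Stage C latent size quoted above
SD_VAE_FACTOR = 8          # assumption: factor-8 VAE, as stated in Stability AI's announcement

cascade_factor = spatial_compression(IMAGE_SIDE, CASCADE_LATENT_SIDE)
sd_latent_side = IMAGE_SIDE // SD_VAE_FACTOR

# Number of spatial positions the generator has to denoise in each case.
cascade_positions = CASCADE_LATENT_SIDE ** 2
sd_positions = sd_latent_side ** 2
position_ratio = sd_positions / cascade_positions

print(f"Stable Cascade spatial compression: {cascade_factor:.2f}x")
print(f"Factor-8 VAE latent side: {sd_latent_side}")
print(f"Latent positions, factor-8 VAE vs Stable Cascade: {position_ratio:.2f}x")
```

Under these assumptions, Stage C works on roughly 28 times fewer spatial positions than a factor-8 latent at the same resolution. This count ignores the number of channels per latent position, so it is only a rough indication of the workload.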

Separating text-conditional generation (Stage C) from the decoding back to high-resolution images (Stages A and B) not only adds flexibility but also dramatically reduces the resources needed for training or fine-tuning.

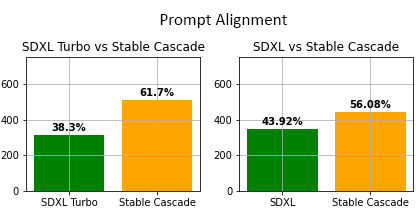

In this section, we compare Stable Cascade with SDXL. Our aim is to show where Stable Cascade stands out in terms of performance, efficiency, and its ability to produce high-quality images from text prompts.

According to Stability AI's research, Stable Cascade demonstrates a slight advantage in adherence to prompts compared to SDXL, aligning more closely with the specified instructions. Our evaluations corroborate these findings, revealing that Stable Cascade excels in generating images that more accurately reflect the requested scenarios, particularly in the creation of realistic portraits and landscapes.

This enhanced fidelity and prompt alignment underscore Stable Cascade's potential in producing high-quality visual content that meets specific creative demands.

Stability AI's findings also indicate that Stable Cascade follows prompts slightly more precisely than SDXL.

Our own testing supports this observation: both models perform closely, but Stable Cascade has a slight edge in prompt adherence. This is most visible when comparing the generated images side by side, where Stable Cascade's outputs match the prompts more faithfully and capture more of the nuances of the request (e.g., 'soundwaves forming a heart').

This gain in prompt fidelity makes Stable Cascade a noteworthy option for visual content that demands strict adherence to user specifications.

When it comes to generating text within images, Stable Cascade clearly surpasses SDXL. The examples below show that Stable Cascade renders the text requested in the prompt far more reliably.

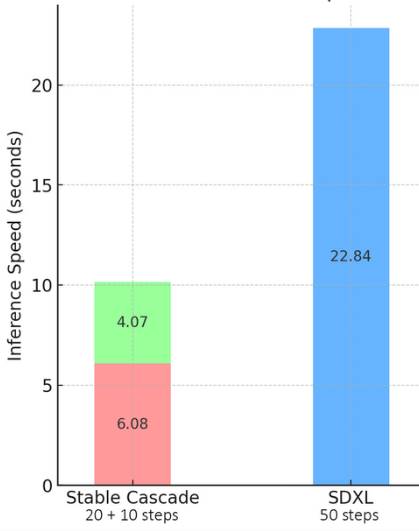

According to a study by Stability AI, Stable Cascade achieves image generation speeds more than twice as fast as SDXL.

In our own testing (see generated images in the previous sections), to achieve optimal results, images with a resolution of 1024x1024 were generated using the following parameters:

This significant improvement in speed with Stable Cascade not only enhances efficiency but also underscores its potential for applications requiring rapid image generation without sacrificing quality.
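To check a speed claim like this on your own hardware, a small timing harness is enough. The sketch below is a generic helper, not the benchmark used by Stability AI or in our tests; `time_generation` and `speedup` are hypothetical names, and the two callables stand in for whatever function generates one image with each model.

```python
import statistics
import time
from typing import Callable


def time_generation(generate: Callable[[], object], warmup: int = 1, runs: int = 3) -> float:
    """Return the median wall-clock time in seconds of `generate` over `runs` calls."""
    for _ in range(warmup):
        generate()  # warm-up calls absorb one-off costs such as model loading
    timings = []
    for _ in range(runs):
        start = time.perf_counter()
        generate()
        timings.append(time.perf_counter() - start)
    return statistics.median(timings)


def speedup(baseline_seconds: float, candidate_seconds: float) -> float:
    """How many times faster the candidate is than the baseline."""
    return baseline_seconds / candidate_seconds


if __name__ == "__main__":
    # Stand-in workloads; replace them with real single-image generation calls.
    slow = time_generation(lambda: time.sleep(0.02))
    fast = time_generation(lambda: time.sleep(0.01))
    print(f"speed-up: {speedup(slow, fast):.2f}x")
```

For a fair comparison, use the same prompt, resolution, and hardware for both models, and report the number of inference steps alongside the timings.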

Although we haven't directly tested it, the architecture of Stable Cascade is designed to facilitate efficient customization and fine-tuning, particularly within its Stage C. This feature is intended to make it simpler for users to adapt the model to specific artistic styles or to incorporate ControlNet functionalities. This flexibility contrasts sharply with the more complex training requirements of models like SDXL, marking Stable Cascade as a potentially more accessible platform for creative and technical modifications.

This streamlined approach has the potential to significantly ease the process of model training. In particular, by concentrating adjustments on Stage C, it's possible to achieve up to a 16-fold reduction in training costs compared to similar efforts with Stable Diffusion models, making the adoption of custom styles and functionalities both more accessible and cost-effective.

With the Ikomia API, you can generate images with Stable Cascade in just a few lines of code.

To get started, you need to install the API in a virtual environment [2].

You can also open the notebook we have prepared directly.

Note: The Stable Cascade algorithm requires 17 GB of VRAM to run.
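As a minimal sketch of what such a workflow can look like, the snippet below builds a one-task Ikomia workflow. The algorithm name `infer_hf_stable_cascade` and the parameter keys `prompt`, `width`, and `height` are assumptions to verify against the algorithm's page on Ikomia HUB; `build_parameters` and `generate` are hypothetical helper names introduced here for illustration.

```python
def build_parameters(prompt: str, width: int = 1024, height: int = 1024) -> dict:
    """Build the task parameters; Ikomia expects every value as a string."""
    # Assumed parameter keys: check the algorithm documentation for the exact names.
    return {
        "prompt": prompt,
        "width": str(width),
        "height": str(height),
    }


def generate(prompt: str):
    """Run Stable Cascade through an Ikomia workflow and return the generated image."""
    # Imported here so the helper above can be used without Ikomia installed.
    from ikomia.dataprocess.workflow import Workflow

    wf = Workflow()
    # Assumed algorithm name; no input image is needed, hence auto_connect=False.
    task = wf.add_task(name="infer_hf_stable_cascade", auto_connect=False)
    task.set_parameters(build_parameters(prompt))
    wf.run()
    return task.get_output(0).get_image()


if __name__ == "__main__":
    image = generate("soundwaves forming a heart")
    print(image.shape)
```

The first run downloads the model weights, so expect it to take noticeably longer than subsequent runs.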

List of parameters:

- The Kandinsky series, including text-to-image, image-to-image, ControlNet, and more

[1] https://stability.ai/news/introducing-stable-cascade