Revolutionizing Image Editing with Privacy-Preserving Portrait Matting

Images are a large part of how we interact online, so there is a growing need to keep personal information safe when processing them. P3M (Privacy-Preserving Portrait Matting) addresses this need by combining deep learning techniques with privacy protection.

P3M portrait matting tackles a hard task: separating a person in a photo from the background, a process known as matting, without revealing who they are. This lets people benefit from modern image editing without compromising their privacy.

P3M, Privacy-Preserving Portrait Matting, is an innovative approach that combines the power of deep learning with the necessity of protecting individuals' privacy in digital images. It specifically addresses the challenge of separating a portrait subject from its background (matting) without compromising the individual's identity.
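To make the matting task concrete: a matting model outputs an alpha matte, a per-pixel opacity between 0 (background) and 1 (subject), and the standard compositing relation `I = alpha * F + (1 - alpha) * B` uses it to place the subject on a new background. The snippet below is a minimal pure-Python sketch of that relation; the `composite` helper and the toy pixel values are illustrative assumptions, not part of P3M's code.

```python
def composite(foreground, background, alpha):
    """Blend two same-sized RGB images pixel by pixel using an alpha matte.

    Images are nested lists [row][col] of (r, g, b) tuples in 0..255;
    alpha is a nested list [row][col] of floats in 0..1.
    """
    out = []
    for f_row, b_row, a_row in zip(foreground, background, alpha):
        out_row = []
        for f, b, a in zip(f_row, b_row, a_row):
            # I = alpha * F + (1 - alpha) * B, applied per colour channel
            out_row.append(
                tuple(round(a * fc + (1 - a) * bc) for fc, bc in zip(f, b))
            )
        out.append(out_row)
    return out


# Toy 1x2 image: the first pixel is fully subject, the second is half-transparent
# (as happens along hair or other soft edges).
fg = [[(200, 100, 50), (200, 100, 50)]]
bg = [[(0, 0, 0), (100, 100, 100)]]
alpha = [[1.0, 0.5]]
result = composite(fg, bg, alpha)
```

Fractional alpha values are what distinguish matting from a hard segmentation mask: they let fine structures blend smoothly into the new background.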

Privacy-preserving methods in image processing and computer vision aim to anonymize personal information in images, such as faces, without degrading the quality of the task at hand, such as matting. These methods involve techniques like blurring, pixelation, or generating synthetic data that maintains the utility for specific tasks while ensuring privacy.
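As an illustration of one of these techniques, here is a minimal pure-Python sketch of pixelation applied to a rectangular face region. It assumes a grayscale image stored as nested lists and a face box that would, in practice, come from a face detector; the `pixelate_region` helper and its parameters are hypothetical names chosen for this example.

```python
def pixelate_region(image, top, left, height, width, block=2):
    """Return a copy of a grayscale image with one rectangle pixelated.

    image is a nested list [row][col] of ints. Every block x block tile
    inside the rectangle is replaced by the tile's mean value.
    """
    out = [row[:] for row in image]
    bottom = min(top + height, len(image))
    right = min(left + width, len(image[0]))
    for r0 in range(top, bottom, block):
        for c0 in range(left, right, block):
            rows = range(r0, min(r0 + block, bottom))
            cols = range(c0, min(c0 + block, right))
            values = [image[r][c] for r in rows for c in cols]
            mean = sum(values) // len(values)
            for r in rows:
                for c in cols:
                    out[r][c] = mean
    return out


image = [
    [10, 20, 30, 40],
    [50, 60, 70, 80],
    [90, 100, 110, 120],
    [130, 140, 150, 160],
]
# Assumed face box: the 2x2 top-left corner of the image.
anonymized = pixelate_region(image, top=0, left=0, height=2, width=2, block=2)
```

Only the pixels inside the box change, so the rest of the portrait (hair, shoulders, background) keeps the detail a matting model needs.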

The architecture of P3M portrait matting is a multi-task framework in which a shared encoder feeds two decoders: a segmentation decoder and a matting decoder. This structure is key to producing high-quality portrait mattes without compromising individual privacy.

Let's delve into the detailed components and functionalities that make up the architecture of P3M portrait matting:

The foundation of P3M portrait matting's architecture is a multi-task framework that efficiently balances the intricacies of semantic segmentation and detail matting. This framework is instrumental in processing privacy-preserved images, such as those with obfuscated faces, ensuring that the privacy constraints do not impede the matting quality.

The multi-task nature allows for simultaneous learning of global image features and specific task-relevant features, optimizing the model's performance on both fronts.

At the heart of P3M portrait matting architecture lies the shared encoder, a modified version of ResNet-34 equipped with max pooling layers. This lightweight backbone is selected for its efficiency and effectiveness in capturing base visual features from the input images. The encoder serves as the foundational layer from which semantic and detail features are derived, ensuring that the essential visual cues are preserved even in the face of privacy-enhanced inputs.

P3M portrait matting employs two dedicated decoders: one for semantic segmentation and another for matting. Each decoder is structured with five blocks, comprising three convolution layers each, tailored to their specific tasks. The segmentation decoder utilizes bilinear interpolation for upsampling, focusing on the broader semantic understanding of the image.
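Bilinear upsampling, as used by the segmentation decoder, can be sketched in a few lines of pure Python. This is an illustrative sketch only: it assumes a single-channel feature map stored as nested lists and a corner-aligned coordinate mapping, which is one of several common conventions and not necessarily the one P3M uses.

```python
def bilinear_resize(feature, out_h, out_w):
    """Resize a 2D feature map (nested lists of floats) with bilinear interpolation."""
    in_h, in_w = len(feature), len(feature[0])
    out = []
    for i in range(out_h):
        # Map the output row to a fractional input row (corner-aligned).
        y = i * (in_h - 1) / (out_h - 1) if out_h > 1 else 0.0
        y0 = int(y)
        y1 = min(y0 + 1, in_h - 1)
        wy = y - y0
        row = []
        for j in range(out_w):
            x = j * (in_w - 1) / (out_w - 1) if out_w > 1 else 0.0
            x0 = int(x)
            x1 = min(x0 + 1, in_w - 1)
            wx = x - x0
            # Interpolate along x on the two neighbouring rows, then along y.
            top = (1 - wx) * feature[y0][x0] + wx * feature[y0][x1]
            bottom = (1 - wx) * feature[y1][x0] + wx * feature[y1][x1]
            row.append((1 - wy) * top + wy * bottom)
        out.append(row)
    return out


coarse = [[0.0, 2.0], [4.0, 6.0]]
upsampled = bilinear_resize(coarse, 3, 3)
```

Each new value is a weighted average of its neighbours, which gives smooth outputs suited to coarse semantic maps but cannot recover sharp detail on its own.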

Conversely, the matting decoder employs a max unpooling operation, leveraging the indices from the encoder to refine the detail matting with precision, capturing the fine details necessary for high-quality matting output.
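The pairing of max pooling in the encoder with max unpooling in the matting decoder can be sketched as follows. This is a simplified pure-Python illustration on a single-channel map with 2x2 windows; the helper names are hypothetical. In PyTorch, the equivalent building blocks are `nn.MaxPool2d(return_indices=True)` and `nn.MaxUnpool2d`.

```python
def max_pool_2x2(feature):
    """2x2 max pooling with stride 2.

    Returns the pooled map and, for each pooled value, the (row, col)
    position it came from in the input.
    """
    pooled, indices = [], []
    for r in range(0, len(feature) - 1, 2):
        p_row, i_row = [], []
        for c in range(0, len(feature[0]) - 1, 2):
            window = [
                (feature[r + dr][c + dc], (r + dr, c + dc))
                for dr in (0, 1)
                for dc in (0, 1)
            ]
            value, position = max(window, key=lambda item: item[0])
            p_row.append(value)
            i_row.append(position)
        pooled.append(p_row)
        indices.append(i_row)
    return pooled, indices


def max_unpool_2x2(values, indices, out_h, out_w):
    """Scatter each value back to its recorded position; all other cells are 0."""
    out = [[0] * out_w for _ in range(out_h)]
    for v_row, i_row in zip(values, indices):
        for value, (r, c) in zip(v_row, i_row):
            out[r][c] = value
    return out


feature = [
    [1, 5, 2, 0],
    [3, 4, 8, 6],
    [7, 2, 1, 1],
    [0, 9, 3, 4],
]
pooled, indices = max_pool_2x2(feature)          # encoder side
restored = max_unpool_2x2(pooled, indices, 4, 4)  # decoder side
```

In a real decoder the values being unpooled are decoder features rather than the pooled values themselves, but the principle is the same: the stored indices put each activation back exactly where the strongest response was, which helps preserve edge locations.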

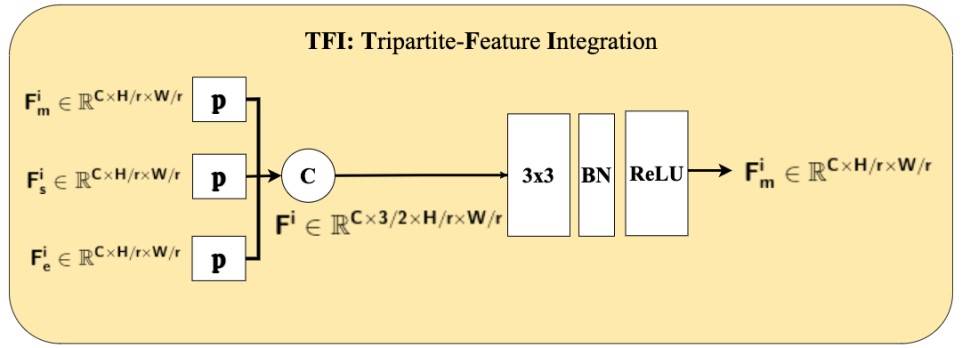

The Tripartite-Feature Integration (TFI) module is a central component of the P3M portrait matting architecture. It integrates features from three sources: the previous block of the matting decoder, the corresponding block of the segmentation decoder, and the symmetrical block from the encoder.

This integration facilitates a comprehensive understanding of the image, ensuring that both global semantics and fine details are considered in the matting process. The TFI module exemplifies the model's ability to maintain the integrity of the matting results while adhering to privacy constraints.

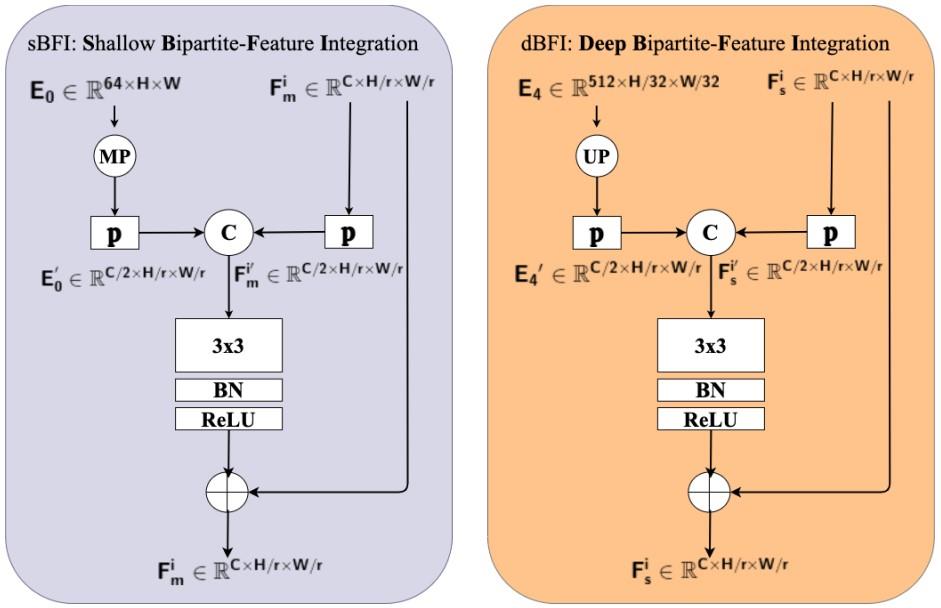
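The idea of merging three feature sources can be sketched as a channel-wise concatenation followed by a layer that mixes the channels. The pure-Python snippet below is a rough illustration under several assumptions: the three features already share the same spatial size, the mixing layer is a 1x1 convolution, and the weights are made-up numbers. The actual layers inside P3M's TFI module may differ.

```python
def concat_channels(*features):
    """Stack feature maps shaped [channel][row][col] along the channel axis."""
    merged = []
    for feature in features:
        merged.extend(feature)
    return merged


def conv1x1(feature, weights):
    """Mix channels independently at each pixel; weights is [out_channel][in_channel]."""
    height, width = len(feature[0]), len(feature[0][0])
    return [
        [
            [
                sum(w * feature[k][r][c] for k, w in enumerate(w_row))
                for c in range(width)
            ]
            for r in range(height)
        ]
        for w_row in weights
    ]


def tri_feature_fusion(matting_prev, segmentation, encoder, weights):
    """Hypothetical fusion step: concatenate the three sources, then mix channels."""
    return conv1x1(concat_channels(matting_prev, segmentation, encoder), weights)


# One channel per source, each a 1x2 map (toy values).
matting_prev = [[[1.0, 2.0]]]
segmentation = [[[0.0, 1.0]]]
encoder = [[[4.0, 0.0]]]
weights = [[0.5, 0.25, 0.25]]  # assumed weights; learned during training in practice
fused = tri_feature_fusion(matting_prev, segmentation, encoder, weights)
```

Because every output pixel sees all three sources at once, the fused feature can combine the segmentation branch's global context with the encoder's low-level detail.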

Complementing the TFI, P3M portrait matting introduces two bipartite-feature integration modules: the Deep Bipartite-Feature Integration (dBFI) and the Shallow Bipartite-Feature Integration (sBFI). These modules are tasked with leveraging deep and shallow features, respectively.

The dBFI focuses on integrating high-level semantic features with the encoder's output to enhance the segmentation decoder.

In contrast, the sBFI module uses finer details from the encoder to improve the precision of the matting decoder. These modules are pivotal in ensuring that P3M portrait matting achieves a delicate balance between preserving privacy and delivering exceptional matting quality.

The P3M-10k dataset, introduced as part of the study, is the first large-scale anonymized dataset for portrait matting designed with privacy preservation at its core.

This publicly available dataset consists of 10,000 high-resolution portrait images with face obfuscation to protect privacy, alongside high-quality ground truth alpha mattes. The dataset was created to enable the development and evaluation of matting techniques that respect user privacy.

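For evaluation purposes, the dataset comes with two test sets: P3M-500-P, which contains 500 privacy-preserved (face-blurred) images, and P3M-500-NP, which contains 500 images without face obfuscation.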

With the Ikomia API, you can remove the background from your image in just a few lines of code.

To get started, you need to install the API in a virtual environment [2].

You can also directly open the notebook we have prepared.

List of parameters:

Throughout this article, we have explored background removal using P3M portrait matting. However, our journey doesn't stop here. The Ikomia platform offers a diverse collection of image matting algorithms, notably the cutting-edge MODNet.

[1] https://github.com/JizhiziLi/P3M

[3] Portrait image: Pexels, "A Bearded Man Wearing a Jacket" by Chandan Suman